Unleashing the Power of Testing: The Benefits and Pros/Cons

Testing plays a crucial and indispensable role in the realm of product development. It is an intricate process through which the overall quality, functionality, and performance of a product are evaluated meticulously. The overarching objective of testing is to ensure that the final product is capable of meeting customer expectations while adhering to various quality standards.

Firstly, testing serves as a means of uncovering bugs or defects within the product. Running tests across diverse scenarios, including edge cases, exposes issues and weaknesses in the system that would otherwise stay hidden. This allows for prompt identification and subsequent rectification of these issues, leading to enhanced product stability.

Additionally, testing not only measures the correctness and accuracy of a software solution but also verifies its compliance with intended requirements. By subjecting different functionalities and features to rigorous examination, it confirms whether they align with the desired specifications and perform as expected.

Furthermore, testing serves as an effective risk mitigation strategy. By simulating real-world usage scenarios, developers can assess the product's reliability and resilience under various conditions. Consequently, potential risks that may impact user experience or compromise data security can be identified early on, thereby ensuring an overall more robust and safe product.

Moreover, testing plays a pivotal role in establishing trust with both customers and stakeholders. By conducting thorough assessments, teams can assure their stakeholders that the product is reliable, consistent, and meets predefined quality standards. This instills confidence in the viability and effectiveness of the solution being developed.

In addition to bug detection and risk mitigation, comprehensive testing aids in optimizing performance by identifying bottlenecks or inefficiencies within the product. It enables organizations to fine-tune their offering to improve speed, responsiveness, scalability, and accessibility.

Another vital aspect where testing proves its worth is facilitating seamless integration among different components within a complex software ecosystem. Testing ensures compatibility among integrated modules or third-party systems by detecting any conflicts or inconsistencies that may hinder smooth operation.

Furthermore, testing contributes to both internal and external product documentation. By meticulously recording the various test cases and their outcomes, it builds a comprehensive repository of knowledge related to the product's behavior. This documentation enhances communication and collaboration among developers, testers, and other stakeholders involved.

Ultimately, testing can significantly reduce the overall cost of product development. Detecting and resolving defects early minimizes the need for expensive fixes once the software is already deployed. Investing in thorough and effective testing therefore tends to pay for itself through long-term cost savings.

In conclusion, testing plays an indispensable role in product development by ensuring quality, functionality, compliance with requirements, risk mitigation, optimization of performance, integration facilitation, documentation building, trust establishment, and cost reduction. By embracing a holistic approach to testing throughout the development cycle, organizations can deliver robust and reliable products that meet user expectations while standing out in competitive markets.

The Unseen Benefits of Rigorous Testing in Software Releases

Rigorous testing in software releases is an essential element that can provide numerous unseen benefits to the overall development process. By meticulously testing software before its release, developers can ensure a higher level of quality, reliability, and user satisfaction. Let's uncover the various advantages that come with putting software through rigorous testing.

Firstly, rigorous testing helps in identifying existing or potential glitches, bugs, or defects that may not be immediately visible to the naked eye. By running numerous tests across different scenarios and edge cases, testers can spot and rectify potential issues before the software reaches the end-users. This proactive approach ensures a smoother user experience and minimizes complaints or negative feedback.

Secondly, rigorous testing contributes to enhanced software stability and improved performance. Through a comprehensive testing process, developers get to assess the behavior and response time of applications under varying conditions. This allows them to identify bottlenecks or areas of weakness early on, making it easier to optimize performance and address any stability concerns efficiently.

Furthermore, rigorous testing facilitates better integration between different components or modules of software. It not only helps in ensuring that all elements work seamlessly together but also assists developers in spotting any inconsistencies or conflicts that might arise from integration. By conducting thorough tests during the development cycle, potential integration issues can be resolved promptly, thus reducing rework and improving overall efficiency.

In addition to identifying defects and performance issues, rigorous testing also boosts security measures within software. By simulating real-world scenarios and attempting to exploit vulnerabilities or weaknesses, testers can uncover potential security risks in the system. Identifying such vulnerabilities early on allows for appropriate safeguards to be implemented, thereby mitigating the risk of cybersecurity breaches.

Moreover, deploying rigorous testing practices promotes reliable compatibility across various platforms and devices. In today's multi-device world, where software needs to run seamlessly on different operating systems and devices with varying specifications, robust testing is crucial. It ensures that users can access and utilize the software effectively regardless of the device or platform they are using, consequently increasing overall user satisfaction and boosting business reputation.

Finally, rigorous software testing empowers developers to adhere to relevant industry standards and regulatory requirements. By thoroughly testing each aspect of the software, developers can verify compliance with necessary regulations, such as accessibility standards or data protection laws. Meeting these requirements not only avoids legal implications but also instills trust and confidence in the end-users.

In conclusion, while rigorous testing may seem like an additional step in the software development cycle, its advantages should not be underestimated. Through meticulous testing practices, developers can identify and rectify defects early on, optimize performance, enhance security, ensure compatibility, comply with regulations, and achieve high customer satisfaction levels. By investing time and effort into rigorous testing, businesses can create more robust software releases that successfully meet user expectations while also reducing post-release issues and long-term costs.

How Continuous Testing Can Transform the Agile Development Process

Continuous testing is an essential aspect of agile development that helps enhance overall efficiency and deliver high-quality software. By automating various testing processes throughout the software development lifecycle, continuous testing brings several benefits to the agile development process.

Firstly, continuous testing allows for faster feedback loops, ensuring that any issues or defects can be identified early on in the development cycle. This early detection helps developers rectify problems promptly, leading to higher-quality software and shorter release cycles.

Another advantage is that continuous testing facilitates better collaboration between developers, testers, and other stakeholders involved in the agile development process. With improved communication and integration among team members, organizations can achieve higher levels of synergy and productivity.

Moreover, the automation of testing enables frequent and consistent execution of test cases. This consistency allows for better tracking of software quality over time, identifying trends or patterns in defects, and making informed decisions based on objective data.

In addition, continuous testing helps teams achieve Continuous Integration (CI) and Continuous Delivery (CD). By continuously integrating code changes into a shared repository and running automated tests at each integration point, CI ensures that conflicts are identified early on. CD builds on this by keeping every build that passes those tests in a releasable state.

Furthermore, continuous testing plays a crucial role in maintaining the stability and reliability of the entire system. Continuous monitoring through automated tests helps detect regression errors that may occur due to changes or additions in the codebase. This capability ensures that new features or modifications do not inadvertently disrupt the existing functionality.

Continuous testing also increases test coverage by automating repetitive tests that would traditionally have been done manually. With an extensive suite of automated tests in place, regression testing that once took considerable manual effort adds little overhead to each change. More thorough testing can thus be achieved in less time, allowing efficient use of resources.

Moreover, continuous testing fosters a culture of quality within Agile teams. The automation framework encourages the adoption of best practices such as building effective test suites and using code quality metrics. Continuous monitoring not only provides valuable insights into improving software quality but also contributes to valuable retrospective analysis.

Additionally, continuous testing helps in reducing overall development costs. By strategically investing efforts in automation, organizations can eliminate the bottlenecks associated with manual testing activities. This reduction in effort and time requirements ultimately leads to cost savings and enables better resource allocation.

Lastly, continuous testing helps uncover latent defects that may go undetected during traditional testing practices. By subjecting software to continuous testing under a variety of scenarios, both expected and unexpected errors can be identified early on. This increased visibility of defects enables agile teams to deliver software with a higher degree of reliability and stability.

In conclusion, continuous testing is an integral component of the agile development process. Its automation-driven approach not only facilitates early error identification but also enhances collaboration, test coverage, system stability, and cost-effectiveness. Ultimately, proper implementation of continuous testing stands to transform how agility is achieved by boosting software quality, promoting better team dynamics, and enabling more frequent and reliable software releases.

Pros and Cons of Automated Testing: Is It the Future?

Automated testing has become an indispensable aspect of the software development life cycle, aiding in identifying bugs and ensuring the delivery of quality products. While it has numerous advantages and is touted as the future of testing, some drawbacks cannot be ignored.

One significant advantage of automated testing is its ability to save time and improve efficiency. Manual testing can be time-consuming and error-prone, especially for complex software systems. By automating repetitive tasks, the testing process becomes much faster and less prone to human errors.

Automated tests also offer improved test coverage. They can execute a vast number of test cases in a short span of time, which is impractical or nearly impossible with manual testing alone. This extensive coverage helps in detecting more defects and vulnerabilities, resulting in higher-quality software.

Furthermore, automated tests empower developers to catch regressions quickly. After making changes or introducing new features, developers can rerun stored test scripts to check for any unintended side effects. This rapid feedback loop ensures that software updates won't break existing functionalities, maintaining robustness throughout the development cycle.
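
To make the idea of rerunning stored tests concrete, here is a minimal sketch in Python. Everything in it is hypothetical and invented for illustration: `slugify` stands in for code the team has already shipped, `slugify_v2` for a later rewrite, and `REGRESSION_CASES` for the stored expectations. It is one simple way to check for unintended side effects, not a prescribed method.

```python
import re


def slugify(title):
    """Hypothetical existing function: turn a title into a URL slug."""
    return re.sub(r"[^a-z0-9]+", "-", title.lower()).strip("-")


def slugify_v2(title):
    """Hypothetical rewrite that looks simpler but changes behavior."""
    return title.lower().replace(" ", "-")


# Stored expectations, captured from behavior the team has already accepted.
REGRESSION_CASES = {
    "Hello, World!": "hello-world",
    "  Testing 101  ": "testing-101",
    "C++ vs. Rust": "c-vs-rust",
}


def find_regressions(func, cases):
    """Return {input: (expected, actual)} for every case that no longer matches."""
    regressions = {}
    for text, expected in cases.items():
        actual = func(text)
        if actual != expected:
            regressions[text] = (expected, actual)
    return regressions


print(find_regressions(slugify, REGRESSION_CASES))     # no regressions reported
print(find_regressions(slugify_v2, REGRESSION_CASES))  # every stored case differs
```

Rerunning `find_regressions` after each change gives the rapid feedback described above: the original function passes cleanly, while the rewrite is flagged before it can reach users.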

Additionally, automated testing increases test accuracy and repeatability. The tests are executed precisely as designed without variations caused by manual intervention or bias. This consistency enhances reliability in identifying genuine defects and reduces false positives or false negatives.

Moreover, automated tests promote better collaboration within development teams. With a standardized framework in place, testers and developers can work together seamlessly to enhance the test suite's scope and overall effectiveness. Regular communication regarding failures or issues allows for smoother rectification and promotes continuous integration practices.

Despite these advantages, automated testing has downsides. First and foremost is the lack of human insight in the testing process. Automation tools cannot replicate human intuition or judgment, so more complex scenarios may still require manual testing.

Another disadvantage with automated testing is the initial setup cost. Establishing an effective automated testing infrastructure demands an investment in the right tools, frameworks, hardware, and skilled resources. The time and effort required for creating test scripts can also be substantial, especially during the early stages of automation implementation.

Maintenance of automated test suites can also pose challenges. As software systems evolve and functionalities change, test scripts need to be updated accordingly. This upkeep can become time-consuming and burdensome, especially for large-scale projects or when rapid development cycles are frequent.

Furthermore, automated tests may provide a false sense of security. Relying solely on automation might overlook certain aspects such as user experience anomalies or integration issues that might require human intuition or visual evaluation.

In conclusion, automated testing clearly offers significant advantages in terms of time savings, efficiency, and extensive test coverage. However, it is important to recognize its disadvantages, such as initial setup cost, maintenance effort, and its limited ability to reproduce human judgment. As software development continues to evolve, automated testing will remain a crucial part of the process, with considerable room for continued growth.

The Psychological Impact of Testing on Development Teams

The psychological impact of testing on development teams is a significant aspect that cannot be ignored. The demands of testing, especially in agile environments, can have both positive and negative effects on the individuals involved.

Testing exerts pressure on development teams to meet strict deadlines and deliver high-quality software. This contributes to a sense of urgency, which can be motivating for some team members. However, constant pressure may also lead to increased stress levels, anxiety, and potential burnout.

Impending deadlines may cause developers and testers to feel overwhelmed, rushed, or inadequate in meeting their objectives. This can create self-doubt and hinder their ability to perform optimally.

Additionally, developers often work in collaboration with testers during the testing process. Miscommunication or differences in opinions between these roles can generate tensions within the team. Testers might feel that their expertise is overlooked or undervalued, while developers may perceive constant scrutiny as an intrusion.

The repetitive nature of software testing might frustrate some individuals as they navigate through cycles of identifying, fixing, and retesting defects. Testing can be monotonous and mentally draining over time. Developers may experience dissatisfaction due to the constant effort required to address bugs identified by testers.

Moreover, seeing a steady stream of bugs and flaws in the software being developed can deplete morale among team members. A "despite our best efforts" feeling can take hold, which is demoralizing. Frequent bug fixes and delays caused by testing can further harm team morale and overall job satisfaction.

On the other hand, testing also provides beneficial psychological outcomes for development teams. Tester involvement helps identify errors before the software reaches end-users or production environments. By catching issues earlier in the development process, the team gains confidence in its ability to deliver a reliable product. This bolstered confidence instills a sense of achievement amongst team members as they strive towards improving software quality.

Effective reporting and feedback loops through testing help establish a culture of continuous improvement within the team. Test result analysis enables developers to learn from their mistakes, identify patterns, enhance coding practices, and implement preventive measures. In turn, this empowers the team through systematic growth and an increased sense of competence.

Furthermore, collaboration between developers and testers within the testing phase presents an opportunity for knowledge sharing and fostering healthy relationships. Open channels of communication can reduce friction and enrich the collective expertise of the team members.

The psychological impact of testing on development teams varies depending on multiple factors including individual personalities, management approaches, team dynamics, and organizational culture. Managers should proactively promote a supportive work environment that acknowledges the challenges faced by testers and developers, providing necessary resources, support systems, mentorship programs, or training opportunities to mitigate potential downsides.

A balanced approach that recognizes both the positive and negative aspects of testing can help create an atmosphere conducive to growth and job satisfaction, and ultimately facilitate the creation of top-notch software products.

Exploring the Cost-Benefit Analysis of Intensive Testing Protocols

Testing is a critical component of any product development or software project. It enables a thorough evaluation and validation of the system to ensure it meets the desired standards and requirements. However, with the evolving complexity of modern technologies, the need for comprehensive testing protocols has increased exponentially. One such approach that has gained prominence is intensive testing protocols.

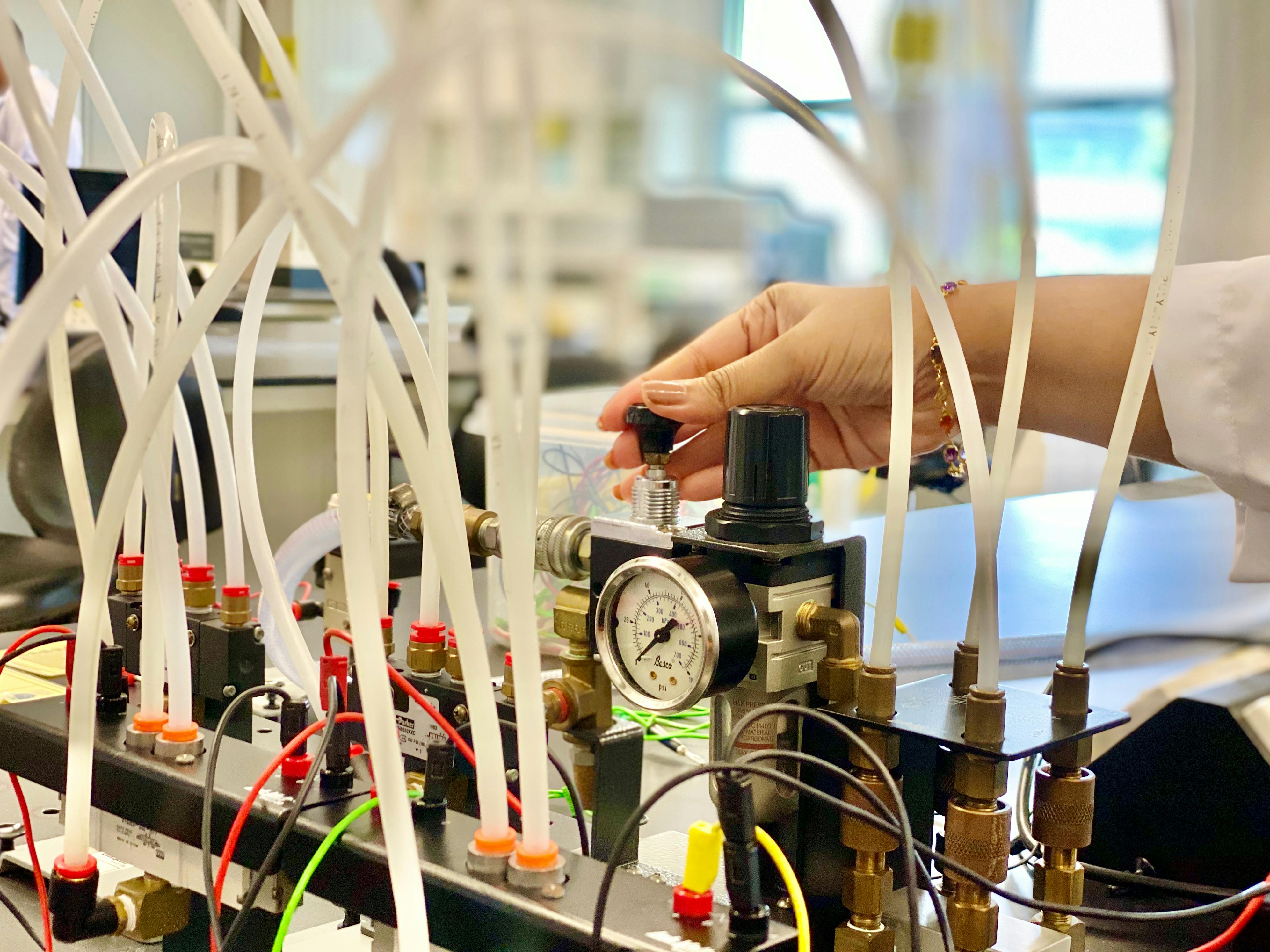

Intensive testing protocols involve rigorous and exhaustive testing methods aimed at uncovering even the subtlest defects or vulnerabilities within a system. These protocols typically include a mix of different testing techniques, such as unit testing, integration testing, system testing, performance testing, security testing, and more. By employing an intensive testing protocol, organizations strive to minimize the risk of potential failures in their systems and enhance the overall quality and reliability.

Like any other endeavor or strategy, conducting intense testing protocols incurs costs. These costs typically arise from various aspects of the testing process, such as hiring skilled testers or investing in sophisticated testing tools and infrastructure. Moreover, extensive testing requires more time and effort at different stages of development, which can lead to delays and impact project timelines.

Set against these costs, the potential benefits of intensive testing protocols are substantial. Identifying and rectifying bugs or vulnerabilities during development helps prevent costly rework or revisions later in the project's lifecycle. Comprehensive testing also reduces the possibility of performance-related issues post-release, thus enhancing customer satisfaction and reducing support costs.

Furthermore, intensive testing protocols often contribute to improved system stability, ensuring minimal downtime and maintenance requirements over time. By performing exhaustive tests across various scenarios, teams gain deeper insights into system behavior, which ultimately leads to more robust and resilient products.

Nevertheless, a cost-benefit analysis becomes crucial when deciding whether to invest in intensive testing protocols for a specific project. Organizations must carefully evaluate the potential benefits against the associated costs. Factors such as project complexity, criticality of user requirements, budget constraints, and project timelines need to be considered beforehand.

The cost-benefit analysis helps establish whether the potential advantages of intensive testing protocols outweigh the financial, time, and resource investments. This evaluation plays a vital role in making informed decisions that align with the project's objectives and allow for effective risk mitigation strategies.
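
A back-of-the-envelope version of this evaluation can be sketched in a few lines of Python. The model is deliberately crude and is an assumption of this sketch, not an established formula: it counts only the savings from fixing defects early instead of after release, then subtracts the cost of the testing itself. The function name, its parameters, and every figure are invented for illustration.

```python
def net_benefit(testing_cost, defects_caught_early, late_fix_cost, early_fix_cost):
    """Savings from fixing defects early, minus what the extra testing costs."""
    avoided_cost = defects_caught_early * (late_fix_cost - early_fix_cost)
    return avoided_cost - testing_cost


# All figures are made up, in arbitrary currency units.
complex_project = net_benefit(
    testing_cost=40_000, defects_caught_early=25,
    late_fix_cost=3_000, early_fix_cost=500,
)
small_project = net_benefit(
    testing_cost=40_000, defects_caught_early=10,
    late_fix_cost=3_000, early_fix_cost=500,
)

print(complex_project)  # 22500
print(small_project)    # -15000
```

With these invented numbers the same testing budget pays off on the project where many defects are caught early and loses money on the smaller one, which is why the analysis has to be done per project. A real analysis would also weigh factors this sketch ignores, such as schedule delays, reputational damage, and support costs.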

In conclusion, exploring the cost-benefit analysis of intensive testing protocols reveals the significant role they play in delivering high-quality systems. While costs are a natural consequence of implementing comprehensive testing approaches, the potential benefits in terms of reduced risks, improved stability, and enhanced customer satisfaction cannot be overlooked. A well-executed cost-benefit analysis assists organizations in determining the most suitable testing protocol for their specific projects, optimizing resource allocation to achieve desired outcomes.

Navigating the Trade-offs Between Speed and Thoroughness in Testing

When it comes to testing, organizations often face the challenge of striking a delicate balance between speed and thoroughness. While thorough testing ensures high product quality, it can significantly slow down the development process. Alternatively, speedy testing helps in meeting tight deadlines but may compromise on the reliability and quality of the product. Therefore, navigating the trade-offs between speed and thoroughness becomes crucial in effective testing.

One key aspect is understanding the project requirements and context. By comprehending the project's objectives, stakeholders' expectations, and user needs, testers can better assess the right balance between speed and thoroughness. Contextual factors such as project complexity, time constraints, resource availability, and risk tolerance also play significant roles in determining the optimal approach.

Applying risk-based prioritization techniques is essential for making informed decisions. When faced with limited time or resources, prioritizing tests based on risks associated with specific functionalities or components helps optimize both speed and coverage. It involves identifying critical modules or aspects that have a higher likelihood of failure or would cause severe consequences if not thoroughly tested.
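
One simple way to put this into practice is to score each test by likelihood of failure times severity of impact, then fill the available time with the highest-scoring tests first. The Python sketch below assumes a 1-to-5 scale for both factors and a greedy selection. The scale, the `CandidateTest` class, and the example tests are all hypothetical, and real teams may weigh risk quite differently.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CandidateTest:
    name: str
    failure_likelihood: int  # 1 (unlikely) to 5 (very likely); illustrative scale
    failure_impact: int      # 1 (minor) to 5 (severe); illustrative scale
    minutes: int             # estimated time needed to run the test

    @property
    def risk(self):
        return self.failure_likelihood * self.failure_impact


def prioritize(candidates, budget_minutes):
    """Greedily pick the highest-risk tests that still fit in the time budget."""
    selected = []
    remaining = budget_minutes
    for test in sorted(candidates, key=lambda t: t.risk, reverse=True):
        if test.minutes <= remaining:
            selected.append(test)
            remaining -= test.minutes
    return selected


# Hypothetical test areas with made-up scores and durations.
candidates = [
    CandidateTest("profile_avatar_upload", 3, 2, 20),
    CandidateTest("checkout_payment", 4, 5, 30),
    CandidateTest("report_export", 1, 3, 25),
    CandidateTest("login", 2, 5, 10),
]

plan = prioritize(candidates, budget_minutes=45)
print([test.name for test in plan])  # ['checkout_payment', 'login']
```

With a 45-minute budget, the two tests covering the most severe failures are run and the lower-risk ones are deferred. A greedy pick like this is not guaranteed to make the best possible use of the budget, but it is easy to explain to stakeholders.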

The effective usage of automation tools and frameworks aids in balancing speed and thoroughness. Automated tests help accelerate repetitive tasks, regression testing, and core functionality checks while freeing up human testers' time to focus on more complex scenarios. However, careful consideration should be given to ensure automated tests cover essential aspects; otherwise, important defects may be missed.

Adopting Agile methodologies like Scrum or Kanban can provide structures that assist in balancing speed and thoroughness. Splitting requirements into smaller testable increments allows activities to run in parallel, which shortens feedback loops and speeds up releases while maintaining an adequate level of scrutiny for each increment.

Collaboration is vital while navigating trade-offs in testing. Close communication between developers, QA teams, project managers, and stakeholders facilitates shared understanding of priorities and ensures everyone works towards finding the right balance. This collaboration enables early identification and mitigation of risks or issues that may arise due to the chosen trade-off strategy.

Conducting regular retrospectives or post-mortems helps teams analyze their testing processes, identify bottlenecks, make adjustments, and continuously improve. Learning from past experiences enhances decision-making abilities when navigating the trade-offs and refining strategies over time, resulting in more efficient and effective testing practices.

It is also important to recognize that an ideal balance between speed and thoroughness may not always be achievable. Real-world constraints, such as rigid deadlines or limited resources, may require making compromises. In such scenarios, it becomes essential to communicate these compromises to stakeholders and manage expectations accordingly.

In conclusion, successfully navigating the trade-offs between speed and thoroughness in testing requires a holistic approach that considers project requirements, utilizes prioritization techniques, harnesses automation where appropriate, adopts Agile methodologies, promotes collaboration, reflects on lessons learned through retrospectives, and accepts occasional compromises based on realistic constraints. Striking the right balance ensures that testing efforts are optimally directed towards providing high-quality software within given constraints.

The Critical Importance of Test Environments: Simulation vs. Real World

When it comes to testing, whether of software or other products, having the appropriate test environment is of critical importance. Selecting the right environment can significantly affect the accuracy and reliability of the test results obtained. The two main types of test environments commonly used are simulation and real-world environments.

Simulation environments attempt to replicate real-world conditions through some sort of modeling or imitative system. They are designed to create an artificial setting that closely resembles the actual operating environment where the product will be used. Simulation environments offer several advantages. Firstly, they provide a controlled setting where developers can rigorously test their products under specific conditions without affecting any live systems or disrupting ongoing operations. Moreover, simulation environments allow for the testing of extreme scenarios or rare events that are hard to reproduce on demand in real life, giving testers a much broader view of how the product performs across situations.
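
For software, simulating a rare event can be as small as swapping a real dependency for a stand-in that misbehaves on cue. The Python sketch below uses `unittest.mock.Mock` from the standard library to simulate a service that times out. The function `fetch_with_retry` is hypothetical client code invented for this example, and the whole thing is a toy illustration, not a full simulation environment.

```python
from unittest import mock


def fetch_with_retry(fetch, retries=3):
    """Hypothetical client code: call `fetch`, retrying when it times out."""
    last_error = None
    for _ in range(retries):
        try:
            return fetch()
        except TimeoutError as error:
            last_error = error
    raise last_error


# Simulated rare event: the dependency times out twice, then recovers.
flaky_service = mock.Mock(
    side_effect=[TimeoutError("simulated"), TimeoutError("simulated"), "payload"]
)
assert fetch_with_retry(flaky_service) == "payload"
assert flaky_service.call_count == 3

# Simulated extreme scenario: the dependency never recovers.
dead_service = mock.Mock(side_effect=TimeoutError("simulated outage"))
try:
    fetch_with_retry(dead_service)
except TimeoutError:
    outage_reported = True
else:
    outage_reported = False
assert outage_reported and dead_service.call_count == 3
```

Both scenarios run in milliseconds and touch no live system, which is the controlled setting described above. What they cannot show is how a real network behaves under load, so they complement testing against real systems without replacing it.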

However, despite all their perks, simulation environments have limitations. They might not capture every element present in the real world - small nuances and variables that can influence product behavior may be omitted or may not interact in precisely the same way as they would in reality. This lack of accuracy can limit the ability to predict real-world product performance reliably. There's also the risk of overlooking unforeseen circumstances that may only arise in live environments.

On the other hand, real-world test environments offer a more authentic representation of how a product will perform when put into practice. By directly testing within the true operating setting, developers can assess its compatibility with existing systems, potential vulnerabilities, as well as observe its behavior during regular usage patterns. Real-world testing provides valuable empirical evidence since it accounts for dynamic and unpredictable variables specific to live environments.

While real-world test environments aim to provide accurate insight into product operation, conducting tests within such settings can be time-consuming, complex, and costly. Availability of resources and potential interruptions due to interacting systems further complicate these efforts.

Deciding between simulation and real-world test environments often depends on various factors, including the complexity of the product being tested, potential risks involved, and access to necessary resources. In certain cases, it may be ideal to start with simulation environments during early stages of development, gradually transitioning into real-world settings as the product matures. This approach allows for iterative testing and optimization, ensuring that the final product performs as expected once deployed.

To summarize, striking a balance between simulation and real-world test environments is crucial for successful testing. Simulation environments are useful for controlled testing and understanding product behavior beyond typical scenarios, while real-world test environments provide authentic insights into how a product will operate in actual conditions. Employing a combination of both throughout the development process can help uncover potential issues early on and optimize the final product before release.

Breaking Down the Different Types of Testing: Unit, Integration, System, and Acceptance

Testing plays a vital role in software development, ensuring that the final product meets the required expectations. It involves assessing various aspects of the software to identify defects, validate functionality, and guarantee overall quality. In this blog post, we will delve into the four primary types of testing employed in software development: unit testing, integration testing, system testing, and acceptance testing.

Unit Testing:

Unit testing examines individual components or units of code independently. It focuses on checking if these units work as intended and can be subsequently integrated within the system. The primary goal is to identify defects within each component early on, offering developers an opportunity to fix issues before progressing further. Typically, developers write comprehensive test cases using popular frameworks like JUnit or NUnit to automate unit testing.
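To make this concrete, here is a minimal sketch of a unit test. It uses Python's built-in unittest module as a stand-in for frameworks like JUnit or NUnit, and apply_discount is a hypothetical function invented for the example.

```python
import unittest

def apply_discount(price, percent):
    """Hypothetical unit under test: reduce a price by a percentage."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)

class ApplyDiscountTest(unittest.TestCase):
    def test_typical_discount(self):
        self.assertEqual(apply_discount(100.0, 10), 90.0)

    def test_zero_discount_leaves_price_unchanged(self):
        self.assertEqual(apply_discount(59.99, 0), 59.99)

    def test_invalid_percent_is_rejected(self):
        with self.assertRaises(ValueError):
            apply_discount(100.0, 150)

# Run the test case programmatically so the script does not exit afterwards.
suite = unittest.defaultTestLoader.loadTestsFromTestCase(ApplyDiscountTest)
result = unittest.TextTestRunner(verbosity=0).run(suite)
```

Each test checks one behavior of one function in isolation, which is what keeps a failure easy to trace back to its cause.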

Integration Testing:

Once individual units pass the unit testing stage, integration testing comes into play. It concentrates on verifying that these separate units function together correctly when integrated. Integration testing is well suited to exposing issues that arise from communication or data exchange between modules. It aims to catch bugs caused by faulty component interaction, which may go undetected during unit testing.
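The sketch below shows the idea on a small scale: two units that could each pass their own tests are exercised together through the data they exchange. The names parse_order, Inventory, and place_order are hypothetical and exist only for this illustration.

```python
def parse_order(line):
    """Unit 1: turn 'sku,quantity' text into a (sku, quantity) pair."""
    sku, quantity = line.strip().split(",")
    return sku, int(quantity)

class Inventory:
    """Unit 2: track stock levels per SKU."""
    def __init__(self, stock):
        self.stock = dict(stock)

    def reserve(self, sku, quantity):
        if self.stock.get(sku, 0) < quantity:
            raise ValueError(f"insufficient stock for {sku}")
        self.stock[sku] -= quantity

def place_order(line, inventory):
    """Integration point: the parser's output feeds the inventory."""
    sku, quantity = parse_order(line)
    inventory.reserve(sku, quantity)
    return inventory.stock[sku]

# Integration check: raw text goes in, stock levels come out.
inventory = Inventory({"widget": 5})
remaining = place_order("widget,3\n", inventory)
print(remaining)
```

A mismatch between the two units, for example the parser returning the quantity as a string, would only surface in a test like this one, not in the unit tests of either piece.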

System Testing:

After successfully conducting integration tests, it's essential to ensure that all functions integrate as a whole to create a coherent and robust system. System testing verifies whether the developed software meets the specified requirements and works without glitches at the system level. Testers execute various scenarios and use cases pertaining to the entire software system to uncover errors that might have been missed during earlier stages. By simulating real-world usage scenarios, system testing provides a reliable indication of how well the software performs.

Acceptance Testing:

The last step in validating the software is acceptance testing. Its main purpose is to confirm if the application satisfies client requirements and appropriately functions in a real-world environment before deployment. Acceptance tests are often carried out collaboratively between testers and clients or end-users. This type of testing ensures that the software or system meets the desired business objectives and is ready for delivery.

By employing a combination of these testing types in the software development lifecycle, teams can enhance the likelihood of delivering high-quality products. Each type addresses a different aspect of software functionality, providing confidence that defects are discovered and addressed early on, thereby reducing overall development costs. Ultimately, implementing thorough and effective testing methodologies results in significantly improved software reliability, user satisfaction, and business success.

The Power of User Acceptance Testing in Validating Product Market Fit

User acceptance testing (UAT) serves a crucial role in the validation of product-market fit for software products. It enables organizations to engage real end-users and ensure that their needs are effectively addressed. UAT, which is usually conducted during the final stages of development, offers valuable insights that aid in fine-tuning the product before its release. Here's a comprehensive overview of the power of user acceptance testing in validating product-market fit.

Firstly, UAT facilitates direct involvement and feedback from end-users who represent the target market demographic. This involvement helps evaluate the software's usability, functionality, and overall user experience. By observing how users interact with the product, potential pain points or areas for improvement can be recognized before making it available to a broader user base. Moreover, it enables gathering opinions and suggestions directly from intended users, ensuring that their expectations are met.

Through UAT, issues related to system integration compatibility can be exposed early on, prior to full release. By immersing representative users into simulated real-life scenarios supported by the software, organizations can capture how the product fits within existing IT ecosystems or interactions with other software systems. It also aids in uncovering any misuse or other unintended impacts that may arise when interfacing between different applications or environments.

Furthermore, UAT helps identify inconsistencies or disparities between the software and user requirements. Companies often invest substantial resources in market research to understand consumer preferences and ensure their software aligns with market demands. UAT acts as a bridge between development teams and users, allowing evaluators to assess whether the solution accurately corresponds to initial expectations.

One key advantage of UAT is arguably its support for defect recognition at a granular level. Testers replicate operational scenarios encountered during real-world usage, including ones that earlier stages such as unit testing and system testing did not cover. The primary goal is to uncover hidden defects that only users executing various tasks can discover. These identified issues provide developers with vital data for bug fixing, improving usability, and enhancing overall user satisfaction.

Stress testing and scalability assessments are sometimes performed alongside UAT. By simulating a significant number of concurrent user requests or excessive system loads, organizations can determine how well their software performs under peak usage conditions. Understanding the software's limitations in terms of the number of users it can effectively handle allows companies to plan for necessary adjustments before releasing the product to market.

Lastly, UAT affects the time to market for software products positively. Including end-users during development provides rapid feedback loops which help save significant resources that would be otherwise invested in troubleshooting after release. Rapid prototyping and Agile approaches often advocate for early involvement of intended users so that changes and iterations occur swiftly, consequently minimizing lead times and accelerating deployments.

In conclusion, UAT holds significant power in validating product-market fit. By actively involving end-users and gathering their valuable feedback prior to release, organizations can ensure their software aligns with user preferences, meets expectations, and functions seamlessly within different environments. Addressing detected issues at an early stage translates into higher levels of customer satisfaction, increased competitiveness, support for market-oriented decision-making, and overall success in today's rapidly evolving technology-driven markets.

Security Testing: Proactively Protecting Your Applications Against Cyber Threats

Security testing is a paramount aspect of software development. It involves the process of evaluating applications and systems to identify vulnerabilities or potential risks that could be exploited by cyber threats. It aims to safeguard sensitive data, ensure confidentiality, integrity, and availability, whilst mitigating various security risks.

To proactively protect your applications against cyber threats, several security testing techniques and practices are employed.

Firstly, penetration testing (pen testing) is commonly used. This involves simulating an attacker who tries to exploit vulnerabilities or gain unauthorized access to the system. By conducting simulated attacks, experts aim to uncover existing weaknesses and provide recommendations for enhancing the system's security posture.

Next, vulnerability scanning plays a significant role in security testing. Conducted through automated tools or services, it scans networks or applications for known vulnerabilities. The results highlight potential weaknesses that need prompt attention.

Secure coding practices are crucial when it comes to building secure applications. Developers should adhere to established secure coding practices and design principles to prevent common security weaknesses like SQL injection, cross-site scripting (XSS), and buffer overflows. Secure code reviews and static code analysis can assist in detecting such flaws.
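As one small example of such a practice, the sketch below contrasts a parameterized query with string interpolation, using Python's built-in sqlite3 module and an in-memory database. The users table, the two lookup functions, and the payload are made up for the illustration; the unsafe variant is shown only for contrast.

```python
import sqlite3

def find_user(conn, username):
    # Parameterized query: the driver binds `username` as data, not as SQL.
    cur = conn.execute("SELECT id, name FROM users WHERE name = ?", (username,))
    return cur.fetchall()

def find_user_unsafe(conn, username):
    # String interpolation: the input becomes part of the SQL statement.
    cur = conn.execute(f"SELECT id, name FROM users WHERE name = '{username}'")
    return cur.fetchall()

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
conn.executemany("INSERT INTO users (name) VALUES (?)", [("alice",), ("bob",)])

# A classic injection payload that turns the WHERE clause into a tautology.
payload = "x' OR '1'='1"
safe_rows = find_user(conn, payload)
unsafe_rows = find_user_unsafe(conn, payload)

print(len(safe_rows), len(unsafe_rows))
```

A security test can assert exactly this difference: the parameterized lookup treats the payload as an unknown name, while the interpolated one leaks every row.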

Security assessment requires a thorough analysis of an application's architecture and design. Evaluating the system's overall design helps identify potential security gaps or misconfigurations that could leave it susceptible to cyber attacks.

Another essential aspect is identity management and access control. Security testing verifies if proper authentication mechanisms are implemented, ensuring only authorized users gain access. Testing scenarios may include password cracking attempts or bypassing authentication mechanisms.

Encryption plays a vital role in securing sensitive data during communication or storage. Security testing evaluates encryption mechanisms such as TLS (the successor to the now-deprecated SSL protocols), ensuring data remains protected against eavesdropping or unauthorized access.

Mobile application security testing specifically analyzes the security aspects of mobile applications. Techniques such as reverse engineering are employed to dissect the application's code and identify vulnerabilities or weaknesses unique to mobile platforms.

Moreover, security testing encompasses network security assessments, including firewall configuration. It verifies whether applications respond adequately to network-based attacks, such as Denial of Service (DoS) attacks, by actively testing the system's resistance to such threats.

Lastly, social engineering testing is designed to evaluate the human component in an organization's security framework. Through simulated phishing emails or physical intrusion attempts, it aims to gauge employees' awareness and responsiveness to potential threats.

Effective security testing goes beyond just discovering vulnerabilities - it requires strategies for remediation. Comprehensive reports detailing vulnerabilities, their criticality, and recommendations for mitigation should be provided to developers and management, ensuring all identified issues are adequately addressed.

Ultimately, continuous security testing is key to safeguarding applications and systems from evolving cyber threats. By adopting appropriate measures and regularly assessing vulnerabilities, organizations can mitigate risks and maintain a proactive security stance.

Performance Testing: Ensuring Your Application Can Scale

Performance testing is an essential component of ensuring that your application can effectively handle increased user loads without encountering performance issues. It focuses on assessing the responsiveness, speed, stability, reliability, and scalability of an application under different load conditions.

The primary objective of performance testing is to identify crucial bottlenecks and weaknesses within the application, helping developers and stakeholders gain a better insight into the application's overall capabilities. By analyzing these aspects proactively, performance testing allows for necessary optimizations and improvements to be implemented, ensuring a seamless user experience even during peak traffic periods.

Various types of performance testing methodologies are commonly employed in the software development life cycle:

1. Load Testing: This technique focuses on evaluating how an application performs under usual circumstances. It aims to emulate real-world usage scenarios by subjecting the system to its expected workload, monitoring key metrics such as response times, resource utilization, throughput, and concurrent user handling capacity.

2. Stress Testing: Stress testing involves intentionally pushing the system beyond its normal operating conditions to determine its breaking point. By progressively increasing user load or data volume, developers can gauge how the application would behave during extreme scenarios and potentially identify issues such as memory leaks or crashing points.

3. Soak Testing: Also known as endurance testing, it assesses the application's stability over an extended period by subjecting it to a sustained, high load intensity. It helps detect potential memory leaks or degradation of performance due to continuous usage or limited system resources.

4. Spike Testing: Spike testing evaluates an application's ability to handle sudden and significant spikes in user loads. By simulating rapid fluctuations in concurrent users or transaction volumes, this test can identify weaknesses that may cause performance degradation at peak times.

5. Scalability Testing: Scalability testing investigates how well an application can handle expanding user loads and growing environments. It assesses an application's ability to scale up or down smoothly and efficiently while addressing performance limitations associated with processes, databases, network infrastructure, or other components.
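The difference between these test types is largely a matter of the load profile applied. The sketch below is a toy harness, not a replacement for a dedicated load testing tool: it drives a hypothetical in-process handle_request function from a thread pool and reports latency figures. The function, the user counts, and the 1 ms simulated work are all assumptions made for the example.

```python
import time
import statistics
from concurrent.futures import ThreadPoolExecutor

def handle_request(payload):
    # Hypothetical stand-in for the system under test.
    time.sleep(0.001)
    return len(payload)

def timed_call(payload):
    """Return how long one request took, in seconds."""
    start = time.perf_counter()
    handle_request(payload)
    return time.perf_counter() - start

def run_load(concurrent_users, requests_per_user):
    """Fire requests from a pool of simulated users and summarize latency."""
    total = concurrent_users * requests_per_user
    with ThreadPoolExecutor(max_workers=concurrent_users) as pool:
        latencies = sorted(pool.map(timed_call, ["ping"] * total))
    return {
        "requests": total,
        "median_s": statistics.median(latencies),
        "p95_s": latencies[int(0.95 * (total - 1))],
        "max_s": latencies[-1],
    }

# Load test: the expected number of users. Stress test: well beyond it.
baseline = run_load(concurrent_users=5, requests_per_user=10)
stressed = run_load(concurrent_users=50, requests_per_user=10)
print(baseline)
print(stressed)
```

Running the same harness for hours would approximate a soak test, and switching abruptly between the two user counts would approximate a spike test. Against a real system the requests would target a deployed endpoint rather than a local function.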

It is crucial to comprehend the intricacies of performance testing in order to ensure accurate results and address possible vulnerabilities efficiently. This may involve leveraging various testing tools, monitoring extensive metrics, tweaking configurations, and conducting thorough analysis afterward. Therefore, integrating performance testing at multiple stages within the software development life cycle becomes essential to catch and address potential bottlenecks early on.

By conducting effective performance testing for your application, you can mitigate risks related to errors, crashes, poor load handling, or inadequate response times. Ultimately, it results in a highly scalable application that meets user expectations regardless of heavy incoming traffic or increased workload, leading to improved customer satisfaction, greater reliability, and better overall business success.

Examining the Impact of AI and Machine Learning on Future Testing Strategies

The increasingly prevalent presence of artificial intelligence (AI) and machine learning (ML) in our lives has undoubtedly left a profound impact on various industries and sectors. Testing strategies, in particular, have experienced a considerable transformation due to these cutting-edge technologies. In this blog, we examine the impact that AI and ML have on future testing strategies.

First and foremost, AI and ML offer tremendous potential in enhancing the efficiency and effectiveness of testing. Automation plays a pivotal role here, as these technologies facilitate the creation of intelligent systems capable of executing repetitive tasks autonomously. By employing AI-powered algorithms, computer programs can independently derive insights from vast amounts of data, making testing significantly faster and more accurate compared to manual methods.

Regression testing is one area where AI and ML shine. By applying pattern-recognition algorithms to past defects and code changes, tools can flag bug-prone areas and help generate or select test scripts. Moreover, AI and ML can be built into continuous integration and continuous testing (CI/CT) pipelines, helping ensure that software keeps working across iterations without hampering agility.

Machine learning models can be trained to recognize bugs or vulnerabilities within software applications. Their ability to analyze large datasets enables them to detect diverse flaws, such as security vulnerabilities or performance issues, which human testers may overlook or which would require extensive time to find. This subsequently allows for early identification and rectification of problems before they affect end-users.

Beyond streamlining processes and increasing detection capabilities, AI and ML also enable the analysis and optimization of testing practices based on historical data. Given their capacity to mine meaningful correlations from this data, testing teams can extrapolate valuable insights regarding defect patterns, test coverage adequacy, and optimal resource allocation for different types of software projects. Consequently, organizations can make informed decisions about allocating resources appropriately during future testing efforts.

With AI-driven predictive analytics at their fingertips, quality assurance teams can better forecast potential risks connected to a project's timelines, scope, and deliverables. Leveraging these advanced analytical capabilities helps mitigate setbacks and adjust testing strategies accordingly, fostering superior project management practices.

Additionally, AI and ML facilitate the development of intelligent bots that mimic human-like behavior to perform specific testing tasks. This not only reduces mundane workloads but also allows for parallel execution and the possibility to test multiple scenarios simultaneously. By utilizing bots to simulate user behavior and evaluate software functions against predefined criteria, developers and testers alike gain invaluable insights into potential usability issues or performance limitations.

However, it is essential to remain cognizant of potential challenges accompanying the integration of AI and ML into testing strategies. For example, ethical concerns arise when automation replaces manual jobs, potentially leading to workforce displacement and job insecurity. Maintaining a balance between leveraging cutting-edge technologies and preserving employment opportunities is crucial to ensure just implementation practices.

In conclusion, the impact of AI and ML on future testing strategies is profound and far-reaching. From process optimization through automation to advanced defect detection and predictive analytics capabilities, these technologies revolutionize how we approach software testing efforts. Nonetheless, gaining a comprehensive understanding of potential challenges associated with their adoption remains imperative. Embracing these technological advancements responsibly undoubtedly holds the key to unlocking enhanced efficiency, agility, cost-effectiveness, and quality in testing processes across industries.

Overcoming Resistance to Change: Integrating Innovative Testing Approaches in Traditional Teams

Resistance to change is a common phenomenon within traditional teams when it comes to implementing innovative testing approaches. The need to overcome this resistance becomes crucial for such teams to adapt and flourish in today's fast-paced and dynamic work environments.

Understanding the nature of resistance is an essential first step. People tend to resist change due to fear of the unknown, a preference for the status quo, or concerns around job security and competence. Traditional teams often have a sense of comfort and familiarity with their existing workflow, making it challenging for them to embrace new testing approaches.

To address this resistance and successfully integrate innovative testing techniques, the following strategies can be effective:

1. Communication: Communicating the benefits of the proposed changes is crucial. Explaining how these innovative approaches can help identify defects more efficiently, improve overall quality, and save time can alleviate concerns related to their relevance and importance. Openly discussing any doubts or anxieties that team members may have is equally important in fostering understanding.

2. Collaboration: Encouraging team collaboration during the planning and implementation phases can play a significant role in reducing resistance to change. Involving team members early on in decision-making processes empowers them to take ownership and contribute effectively. Effective collaboration creates a sense of shared responsibility for driving positive outcomes.

3. Training and education: Offering comprehensive training programs on the new testing approaches ensures that team members feel equipped to handle any challenges that may arise. Providing opportunities for learning new skills and techniques helps individuals gain confidence, reduces anxiety, and prepares them for adopting innovative methods.

4. Gradual implementation: Introducing changes in a phased manner rather than all at once can minimize resistance among traditional teams. Gradual integration helps focus on one aspect of testing at a time, allowing individuals to adapt slowly without feeling overwhelmed or threatened by extensive alterations to their existing practices.

5. Leadership support: Leaders play a critical role in overcoming resistance to change. Their active involvement, support, and advocacy serve as a guiding light during the integration process. Leaders need to address concerns, provide continuous feedback, and offer reassurances to demonstrate their commitment to the team's success.

6. Acknowledge and empathize: It is crucial to acknowledge that resistance is normal and valid. Each team member may experience this in varying degrees and for different reasons. By empathizing with their concerns, frustrations, or doubts, one can develop a deeper understanding of the resistance and work towards finding solutions.

7. Celebrate small wins: Recognizing and celebrating incremental achievements can instill motivation and enthusiasm among team members. When they see the positive impact of integrated approaches in action, the resistance diminishes, making it easier for them to adapt fully.

By implementing these strategies, traditional teams can gradually break through resistant mindsets, integrate innovative testing approaches smoothly, and foster a culture that embraces change. Ultimately, this enables organizations to stay at the forefront of technological advancements while maintaining high-quality software products and services.

When Too Much Testing Is Just Too Much: Finding the Right Balance

When it comes to testing, finding the right balance is crucial. While testing is an important aspect of any development process, too much testing can become excessive and hinder progress. So how do we strike that balance and ensure that testing is effective without becoming overwhelming?

Firstly, it's important to understand why testing is necessary. Testing helps identify bugs, vulnerabilities, and performance issues within the software or product being developed. It gives developers a comprehensive view of the system's behavior under different conditions, allowing them to make necessary improvements and deliver a reliable end product.

However, conducting excessive tests can significantly slow down the development process. When there are too many tests involved, it results in a prolonged feedback loop which delays the overall project timeline. This delay can be cumbersome and may negatively impact both customers and stakeholders waiting for the final product.

Additionally, extensively testing every minute aspect of a system can lead to diminishing returns. Not all features or components carry the same level of importance. By focusing on extensive testing without considering the relative importance, valuable resources may get invested inefficiently.

One way to achieve the right balance is by prioritizing which aspects of the system require thorough testing. By identifying critical functionalities, risky areas prone to bugs, and commonly used features, testers can optimize their efforts for maximum impact.

Another factor to consider when deciding on the appropriate amount of testing is risk assessment. Estimating the potential risks associated with a software failure can help prioritize testing efforts accordingly. Higher-risk features or those that could cause significant damage upon failure should receive more attention during the testing phase than lower-risk elements.
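One common way to put this into practice is to score each feature by how likely it is to fail and how bad a failure would be, then test in descending order of the product. The sketch below assumes a simple 1 to 5 scale for both factors; the scale, the feature names, and the scores are illustrative only.

```python
from dataclasses import dataclass

@dataclass
class Feature:
    name: str
    failure_likelihood: int  # 1 (rare) to 5 (frequent), the team's estimate
    failure_impact: int      # 1 (cosmetic) to 5 (severe)

    @property
    def risk_score(self):
        # Simple risk model: likelihood multiplied by impact.
        return self.failure_likelihood * self.failure_impact

def prioritize(features):
    """Order features so the riskiest ones are tested first."""
    return sorted(features, key=lambda f: f.risk_score, reverse=True)

features = [
    Feature("payment processing", 3, 5),
    Feature("profile avatar upload", 2, 1),
    Feature("login", 2, 5),
    Feature("report export", 4, 2),
]

ranked = prioritize(features)
for feature in ranked:
    print(f"{feature.risk_score:>2}  {feature.name}")
```

The scores themselves are judgment calls, so the value of the exercise lies less in the exact numbers than in making the team's assumptions about risk explicit and comparable.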

Moreover, involving different team members throughout the development process can bring diverse perspectives on adequate testing levels. Collaboration between developers, QA engineers, designers, and business analysts can provide valuable insights into where additional tests might be needed or where they can be minimized.

Automation plays a critical role in finding the sweet spot as well. Automated tests greatly reduce manual effort and allow for faster and more frequent iterations. Investing in automated testing frameworks can create an efficient feedback loop that ensures wider test coverage within shorter time spans, without overwhelming the development process.

Lastly, establishing clear testing goals and success criteria is crucial. This helps define when sufficient testing has been performed and when it's appropriate to move forward in the development cycle. Based on project constraints, deadlines, and specific requirements, the team can agree on achievable testing goals to avoid excessive or inadequate testing practices.

Finding the right balance in testing is an ongoing process that relies on continuous evaluation and adjustment. It is important to strike a balance between comprehensive coverage and avoiding excessive testing efforts that impede progress. By understanding priorities, assessing risks, leveraging automation, involving various stakeholders, and setting clear goals, testers can ensure effective testing without going overboard.